Have you heard of Google BERT or ALBERT? Brath explains Google’s new neural networks

Caroline Danielsson

Google is constantly making changes and updates to its algorithms. Most of them are so small that Google does not even announce them—they are simply part of the company’s daily work. From time to time, however, larger updates appear: core updates and new features that are given their own names. These are the updates and changes that SEO professionals are most interested in. One of the latest named changes is called BERT.

BERT is an acronym that stands for Bidirectional Encoder Representations from Transformers. Behind the highly technical name is a new way for Google to understand natural language: a model that learns language from large amounts of text rather than from hand-written rules. At the moment, BERT has not been rolled out in all languages, but it is only a matter of time before it is implemented in Swedish Google as well.

BERT has actually been a major topic in language technology circles for about a year, but it is only now going live on Google Search. It is also worth mentioning that BERT is open source—the code is completely free for others to use in their own systems.

According to Google, BERT will affect up to 10% of all searches, but the question is whether it really makes a big difference for you as a website owner.

How BERT works

The first word in the acronym gives us a clue as to what it does: bidirectional. A common way to teach a computer language is to process words in a linear sequence, either from left to right or right to left. Bidirectional language learning means that the system uses all surrounding words to determine the meaning of a sentence.

For example, the Swedish word “tomten” can mean either Santa Claus or the plot of land where your house stands. In a Swedish search query that translates to “how is tomten feeling after Christmas,” humans easily understand from context that Santa is meant. A one-directional language model can only interpret “tomten” based on the words that come before it. As a result, you might get both search results about Santa’s BMI and articles about winter lawn care.

A bidirectional system like BERT can also take into account the words that come after “tomten.” When it sees the word “Christmas” in the query, it better understands the context and provides information about Santa’s wellbeing after the holiday rush. BERT reads the entire sentence at once and connects the words to each other.
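The difference in what each approach gets to see can be illustrated with a toy sketch. This is not how BERT works internally: BERT is a trained neural network, whereas the cue-word lists and the `guess_sense` function below are hypothetical and written by hand purely for illustration.

```python
# Toy illustration only: hand-written cue words stand in for what a real
# model would learn from data. All names here are hypothetical.
SENSE_CUES = {
    "santa": {"christmas", "gifts", "reindeer"},
    "plot of land": {"house", "lawn", "garden"},
}

def guess_sense(tokens, target, bidirectional):
    """Guess the sense of `target` from the context words that are visible."""
    i = tokens.index(target)
    # A left-to-right reader only sees the words before the target;
    # a bidirectional reader also sees the words after it.
    context = tokens[:i] + (tokens[i + 1:] if bidirectional else [])
    scores = {sense: len(cues & set(context)) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # If no cue word is visible, the sense cannot be decided.
    return best if scores[best] > 0 else "ambiguous"

query = "how is tomten feeling after christmas".split()
print(guess_sense(query, "tomten", bidirectional=False))  # ambiguous
print(guess_sense(query, "tomten", bidirectional=True))   # santa
```

With only the words before “tomten” visible, nothing in the query settles the meaning. Once the words after it are included, “christmas” tips the guess toward Santa.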

BERT is a complement

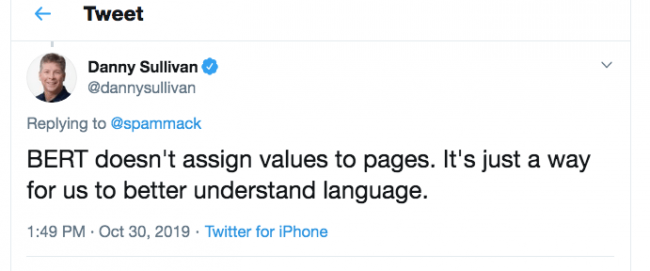

BERT does not replace RankBrain, Google’s first major language-learning system. Instead, they work together. BERT is particularly helpful for unusual or highly specific search queries, especially those where the interpretation of prepositions changes the intent of the query.

Improved understanding of search queries

One of the most important parts of Google’s search engine is understanding what a query is actually about. To display the best results, the search engine must learn language and interpret it correctly.

Example: If you search for “build a wooden wall,” the search engine is very good at understanding the intent. It shows websites explaining how to build a wooden wall. However, since you have not specified whether it is an exterior or interior wall, both may appear.

But let’s say you search for “build a wall without wood.” In this case, you are looking to build with concrete blocks or steel studs and drywall, yet previously the same wooden wall articles might still appear.

In the past, search engines placed too little emphasis on words like “without” and other prepositions. Instead, the query was interpreted as “build wall wood,” producing results related to wooden walls. That may now be a thing of the past thanks to BERT, which understands the meaning of “without” and filters wooden wall content out of the results.
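The older behaviour resembles a keyword matcher that throws away small function words. The sketch below is a simplification under stated assumptions, not Google's actual processing: the stop-word list and the `keyword_view` function are hypothetical, and the query “build a wall with wood” is added here only for comparison.

```python
# Hypothetical stop-word list; real search engines are far more complex.
STOP_WORDS = {"a", "the", "with", "without", "of", "for"}

def keyword_view(query):
    """Reduce a query to its content words, as a naive keyword matcher might."""
    return [word for word in query.lower().split() if word not in STOP_WORDS]

print(keyword_view("build a wall with wood"))     # ['build', 'wall', 'wood']
print(keyword_view("build a wall without wood"))  # ['build', 'wall', 'wood']
```

Both queries collapse to the same three keywords, so a system working this way has no means of telling them apart, even though the intent is the opposite.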

Beyond that, BERT has achieved top results in several language processing tasks. It can perform sentiment analysis, determining whether text is positive, negative, or neutral. It can recognize entities such as concepts, colors, people, and objects. It has performed well in textual inference tests, which involve understanding implied meaning, such as judging whether one sentence follows from another. It can also assign correct semantic labels to different parts of a sentence, such as identifying whether something represents an action, an agent, or a result.

BERT is already old news – ALBERT is here

While most of us have only just heard about this new technology, for tech giants BERT is already old news. Since the code is fully open source and available for anyone to download, many large companies have created their own versions of BERT. Some newer models have even achieved better benchmark results than the original. Microsoft’s MT-DNN, for example, has received higher scores.

Google itself has already begun building on BERT together with researchers at the Toyota Technological Institute at Chicago and has created its successor: ALBERT. ALBERT is a lighter model with far fewer parameters than BERT, and it addresses some of the issues found in the original model. There has been no official announcement that ALBERT will replace BERT, so for now BERT remains the primary model, alongside compressed variants such as DistilBERT and FastBERT. Most likely, BERT has already been rewritten to include improvements introduced in ALBERT.

How do you optimize for BERT?

The short answer is: you don’t. Optimizing specifically for a new component of the algorithm has little value. BERT exists to help Google match your site more accurately to relevant search queries.

One advantage for SEO practitioners is that you no longer need to worry about linear keyword thinking when targeting long-tail queries. You no longer have to sacrifice good grammar or content quality to force awkward keyword phrases into your text.

Instead, you can focus entirely on doing what both users and Google want: producing and sharing high-quality content. Most people will not even notice BERT. The only potential impact could be a slight decrease in click-through rates, as BERT delivers more precise results—meaning users may not need to click through multiple sites to find what they are looking for. BERT may also influence featured snippets.